Last week, Tesla blogger Omar (known online as Whole Mars Catalog) posted a YouTube video of his Tesla driving 50 km autonomously through heavy Los Angeles traffic with zero human interventions using Tesla’s latest Full Self-Driving (FSD) Beta software version 10.69.25.1.

The video begins in Omar’s garage. The Tesla Model 3 autonomously backs itself out of the garage, turns onto the road and then proceeds to drive itself to the precise location that Omar entered into the navigation screen.

It makes for incredible viewing. You can watch Omar’s real-world view through the windscreen and compare it in real time to the 360-degree, 3D-rendered view seen by the Tesla on the touch screen.

To most of us, driving feels like second nature. Once we’ve decided where we’re going, our brain seems to load the “driving” program and we instinctively execute all the functions needed to get us to our destination.

Through our eyes we receive visual information from everything around us. We read traffic signs, estimate the distances and speeds of other cars, and check our mirrors to see what’s happening behind us. Our brains process this visual information and then, like a conductor, direct our limbs to push the accelerator, press the brake or turn the steering wheel as required.

For most of us, this process feels so natural and intuitive that we forget just how incredibly complex it is. Every time you drive you’re subconsciously making thousands of decisions without realising it.

This complexity is why autonomous driving has been one of the most challenging engineering problems of our age, and it’s why Tesla’s latest FSD software is so astonishing in its capability.

For those who haven’t been following closely, learning about how advanced Tesla’s autonomous driving technology is may come as a surprise.

Over the past decade, most companies working on autonomy, including Waymo (which began as Google’s self-driving car project), have been attempting to solve it by mapping the car’s surroundings using LiDAR (Light Detection and Ranging) sensors. LiDAR sends out pulses of light, which then rebound off objects back to the sensor.

The time taken for the light to return to the sensor enables the computation of object distances, and the change in distance between successive pulses gives relative velocities. In some ways it’s very similar to how bats navigate in the dark using echolocation, a natural form of sonar (sound navigation and ranging). This approach, however, has hit some major limits, which is why these companies aren’t even close to cracking full autonomy.
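The time-of-flight idea described above comes down to simple arithmetic. The following is a minimal Python sketch of it, not how any particular LiDAR unit is programmed; the function names and the sample timings are assumptions made purely for illustration.

```python
# Illustrative time-of-flight arithmetic. Function names and sample
# values are hypothetical, chosen only for this example.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to an object from a pulse's round-trip time.

    The pulse travels to the object and back, so the one-way
    distance is half the total path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def relative_velocity(first_m: float, second_m: float, interval_s: float) -> float:
    """Change in distance per second between two measurements.

    A negative result means the object is getting closer.
    """
    return (second_m - first_m) / interval_s


if __name__ == "__main__":
    # Two pulses fired 0.1 s apart (assumed values).
    d1 = distance_from_round_trip(400e-9)
    d2 = distance_from_round_trip(390e-9)
    print(f"first distance:  {d1:.2f} m")
    print(f"second distance: {d2:.2f} m")
    print(f"relative velocity: {relative_velocity(d1, d2, 0.1):.1f} m/s")
```

With these assumed timings, a pulse that returns after 400 nanoseconds corresponds to an object about 60 m away, and a shorter return time on the next pulse means the object is closing in.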

Unlike bats, humans relate to their three-dimensional world through vision. It’s how we’ve been navigating for millions of years. The fundamental principle guiding Tesla’s approach to autonomy is that since humans use vision to navigate and drive, so should autonomous driving systems. Our world, and in particular our road systems with their lines and signs, is set up for vision, not for LiDAR or any other mapping system such as sonar or radar (radio detection and ranging).

Tesla’s vision based approach to solving autonomy uses cameras and artificial intelligence to identify everything around the car in real time. Eight cameras give a 360 degree view of the car’s surroundings. Massive datasets and brain-like neural net learning enable Tesla’s autonomous software to accurately identify and map the car’s surroundings including signs, lane lines, intersections, vehicles and pedestrians.

The software is so advanced that it can differentiate between sedans, utes, trucks and buses as well as motorbikes, scooters and bicycles. It can accurately identify pedestrians, traffic cones, wheelie bins and even dogs and place them in 3D space with astonishing precision. Unlike the purely object based LiDAR system, the cameras can also identify and read traffic signage such as stop signs, traffic lights, speed limits, road works and even the arrows and symbols painted onto road surfaces.

To be able to process, learn and respond to visual information, human eyes are coupled with the human brain. With Tesla’s approach, human eyes are substituted with high-definition cameras and the brain is substituted with a state-of-the-art neural net computer running the latest version of Tesla’s FSD software.

Neural net learning is loosely modelled on how human brains learn. As we experience more of our world, we recognise patterns, and our decisions and responses to situations get better and better. Anyone who has taught someone how to drive is familiar with this process.

The mind of someone who is just learning to play tennis is going to miss a lot of shots while the mind of a world champion is able to make shots with incredible precision and reliability. It’s all about practice, learning and data. The world champion tennis brain is trained on a dataset of millions of practice shots.

Tesla’s FSD works in the same way. In a talk from 2020, former Senior Director of Artificial Intelligence Andrej Karpathy outlined how Tesla has billions of km of real-world driving data. He discussed how Tesla uses millions of images to train the software to get better and better at identifying things like stop signs. In the real world, some stop signs are obstructed by graffiti or covered with leaves. As with a human brain, the more of these edge cases that are fed through the neural net, the better the software gets at correctly identifying them.

In 2020, Tesla had around 5 billion km driven in Autopilot. By mid-2022 it had ten times that figure, at over 50 billion km driven in autonomous mode. As Tesla’s fleet grows rapidly, so does its dataset, meaning that FSD software continues to improve at a blistering pace.

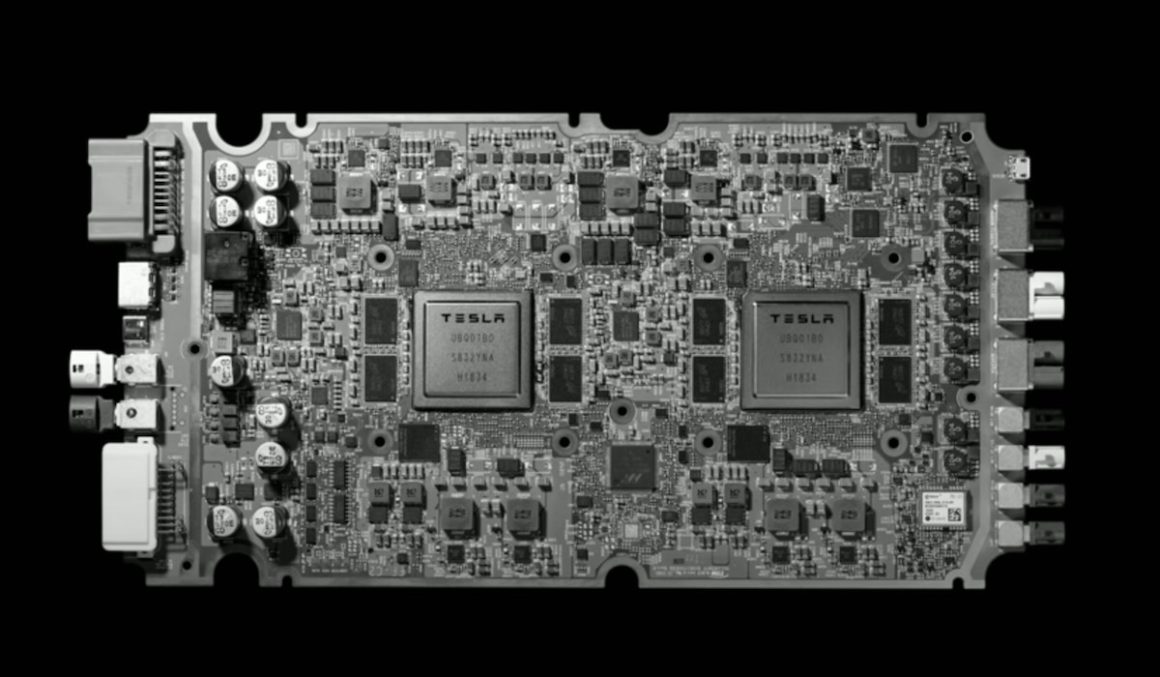

In 2019, during its Autonomy Day event, Tesla announced that it had developed a neural-net-optimised FSD computer called Hardware 3 and that all models from mid-2019 would include it. The computer was developed in-house and, according to Tesla, processes video at 2,300 frames per second, 21 times faster than the company’s previous-generation hardware. For context, human vision is often said to handle only around 60 frames per second.

This high speed processing together with 360 degree vision and highly responsive electric motors means that Tesla’s self-driving system can identify and react to imminent collisions much faster than humans can. Collision avoidance compilations on YouTube show countless examples of this technology in action.

During the event, Tesla claimed that with the new computer all vehicles produced from mid-2019 onwards have the processing power to enable full autonomy at safety levels that far exceed human drivers. From mid-2019, the critical path to full autonomy was no longer a problem of on-board computing power but rather of software development. With ever-increasing datasets and regular over-the-air updates, it was just a matter of time before this would be achieved.

In 2021 there were 2,000 drivers testing the FSD Beta software. By October 2022 that number had increased to 160,000, dramatically increasing the dataset and therefore accelerating the software’s learning speed even further.

In October 2022 Tesla showcased its latest FSD developments during AI Day 2, including its in-house built ‘Dojo’ training computer and three supercomputers with a combined 14,000 GPUs training the FSD software on 500,000 real-world driving videos per day. The system processes 160 billion video frames each day!

‘Labelling’ is the process where objects (cars, signs etc.) are identified and labelled in the real-world driving videos used to train the system. Previously this task was done manually by Tesla employees. During the AI Day 2 event, Tesla announced that its new auto-labelling training software dramatically accelerated this process, completing the equivalent of 5 million hours of manual labelling in just 12 hours. That is roughly a four-hundred-thousand-fold increase in labelling speed!
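The speed-up quoted above is easy to check. This short Python sketch simply divides the two figures given in the article; the variable and function names are illustrative only.

```python
# Figures as quoted in the article; names are illustrative.
MANUAL_LABELLING_HOURS = 5_000_000  # hours of equivalent manual work
AUTO_LABELLING_HOURS = 12           # hours taken by auto-labelling


def speedup(manual_hours: float, auto_hours: float) -> float:
    """How many times faster the automated process is."""
    return manual_hours / auto_hours


if __name__ == "__main__":
    factor = speedup(MANUAL_LABELLING_HOURS, AUTO_LABELLING_HOURS)
    print(f"speed-up: about {factor:,.0f} times")
```

The exact quotient is a little under 417,000, which rounds to the four hundred thousand quoted.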

The massive expansion in training data and labelling processed by Tesla’s Dojo supercomputers combined with the full autonomy ready FSD computer and over-the-air software updates means that Tesla’s vision of full autonomy is now within reach.

If our entire fleet had such technology, road accidents, injuries and fatalities could approach zero. The US National Highway Traffic Safety Administration (NHTSA) measures the frequency of road accidents using “miles driven per accident”. In Q3 2022 the US average was 652,000 miles driven per accident. For Teslas using Autopilot, an accident occurred only every 6.26 million miles, which is roughly 10 times fewer accidents per mile than the US national average (about 9.6 times on these figures)!
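To see where the "10 times" figure comes from, the two numbers can be compared directly. The sketch below is a back-of-the-envelope calculation in Python using only the figures quoted above; the function names are made up for the example.

```python
# Figures as quoted in the article for Q3 2022; names are illustrative.
US_AVERAGE_MILES_PER_ACCIDENT = 652_000
AUTOPILOT_MILES_PER_ACCIDENT = 6_260_000


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'miles per accident' into an accident rate."""
    return 1_000_000 / miles_per_accident


def safety_ratio(baseline_mpa: float, comparison_mpa: float) -> float:
    """How many times lower the comparison accident rate is than the baseline."""
    return comparison_mpa / baseline_mpa


if __name__ == "__main__":
    us_rate = accidents_per_million_miles(US_AVERAGE_MILES_PER_ACCIDENT)
    ap_rate = accidents_per_million_miles(AUTOPILOT_MILES_PER_ACCIDENT)
    ratio = safety_ratio(US_AVERAGE_MILES_PER_ACCIDENT, AUTOPILOT_MILES_PER_ACCIDENT)
    print(f"US average: {us_rate:.2f} accidents per million miles")
    print(f"Autopilot:  {ap_rate:.2f} accidents per million miles")
    print(f"ratio: {ratio:.1f}x")
```

On these figures the ratio comes out at about 9.6, which rounds to the headline 10.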

In addition to a dramatic reduction in road accidents, autonomous electric robotaxis powered by cheap renewable energy could completely change the economics of car ownership. Why spend tens of thousands of dollars on a car when you can hail a robotaxi and travel across your city for just a couple bucks?

The implications of such a future are profound. Instead of personal cars transporting just one person per day, robotaxis may move up to 20 people per day, freeing up a huge amount of space in our cities currently needed for parking. A societal shift away from car ownership would also have huge implications for the 70-million-unit global car market.

Testers like Whole Mars Catalog have been filming and documenting the astonishing speed at which this technology is developing. Considering the complexity and sheer number of variables involved, a 50 km fully autonomous drive through LA traffic is truly remarkable.

If autonomous driving is already at this level in early 2023, how advanced will it be by 2025 or 2030? It’s incredibly exciting to ponder!

Daniel Bleakley is a clean technology researcher and advocate with a background in engineering and business. He has had a strong interest in electric vehicles, renewable energy, manufacturing and public policy.
